Table of Contents

- Key Benefits:

- Understanding Web Crawling Basics

- What is Web Crawling and Its Challenges?

- How a Web Crawling API Simplifies Data Extraction

- Features of a Web Crawling API

- Scalability and Reliability of APIs

- Using WebCrawlerAPI: A Practical Example

- Code Example: Data Extraction with WebCrawlerAPI

- Customizing API Requests

- Benefits of Using a Web Crawling API

- Efficiency and Scalability

- Reliability and Accuracy

- Flexibility and Customization

- FAQs

- What is the difference between API and web scraping?

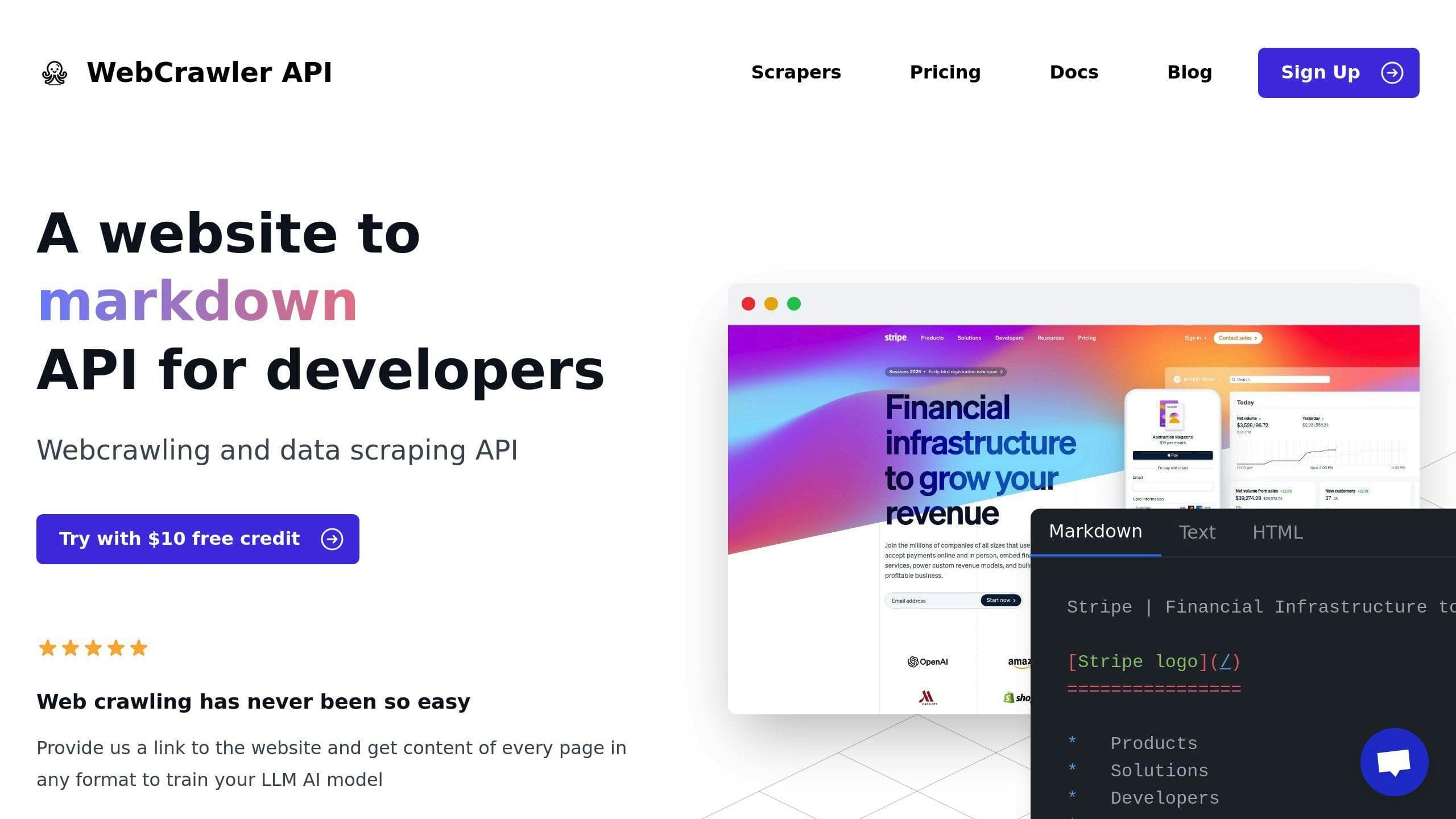

A web crawling API is a tool that automates the process of collecting data from websites, saving you the effort of writing complex code. It handles challenges like bypassing anti-bot systems, managing large-scale data, and rendering JavaScript-heavy pages.

Key Benefits:

- Simplifies data extraction: Extracts data in formats like JSON, HTML, Text, or Markdown.

- Handles technical hurdles: Bypasses CAPTCHAs, rotates proxies, and mimics browser behavior.

- Scalable and reliable: Processes large volumes of data efficiently with error handling.

For example, APIs like WebCrawlerAPI let you focus on analyzing data instead of worrying about infrastructure or anti-scraping techniques. Whether you're tracking competitor pricing or aggregating data for AI models, these APIs make it faster and easier.

Understanding Web Crawling Basics

Web crawling is the automated process of scanning and indexing web pages by systematically browsing them and following links. Think of it as a tool that maps out the web, downloads pages, and organizes the data into an interconnected structure.
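To make the "browse a page, follow its links" loop concrete, here is a minimal sketch of a breadth-first crawler built on Python's standard library. The three-page `SITE` dictionary and the `fetch` callback are assumptions made for illustration so the example runs without network access; a real crawler would download pages over HTTP and would normally restrict itself to the domains it is meant to cover.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl; `fetch` maps a URL to HTML text (or None)."""
    seen = {start_url}
    queue = deque([start_url])
    link_map = {}  # page URL -> list of absolute URLs it links to
    while queue and len(link_map) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page could not be retrieved; skip it
        parser = LinkExtractor()
        parser.feed(html)
        outgoing = [urljoin(url, href) for href in parser.links]
        link_map[url] = outgoing
        for link in outgoing:
            if link not in seen:  # never queue the same URL twice
                seen.add(link)
                queue.append(link)
    return link_map


# Hypothetical three-page site held in memory so the sketch runs offline.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}

link_map = crawl("https://example.com/", SITE.get)
```

The resulting `link_map` is the "interconnected structure" described above: each crawled page is mapped to the pages it links to.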

What is Web Crawling and Its Challenges?

Web crawling involves scanning and processing web content, but it comes with its fair share of hurdles:

- Anti-bot defenses: Tools like CAPTCHAs and IP blocking are designed to stop automated access.

- Dynamic content: Pages that load content through JavaScript require more advanced handling.

- Data volume: Extracting and managing large-scale data responsibly can be overwhelming.

- Frequent updates: Websites often change content, making it tricky to keep data current.

These challenges are why many developers rely on web crawling APIs. These APIs handle the heavy lifting - like bypassing technical barriers and managing infrastructure - so developers can focus on analyzing the data rather than worrying about how it’s collected.

Specialized web crawling APIs have become essential for tackling these issues. They offer scalable, efficient solutions while adhering to website policies and technical standards, simplifying the process of extracting and using web data.
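One common way a crawler can respect a site's stated policy is to check its `robots.txt` rules before fetching a page. The sketch below uses Python's standard `urllib.robotparser` module; the rules in `ROBOTS_TXT` and the `example-crawler` user agent are made up for illustration, and this is not a description of how any particular API implements compliance.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from
# the target host before requesting any other page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="example-crawler"):
    """Return True if the parsed rules permit this user agent to fetch url."""
    return rules.can_fetch(user_agent, url)


allowed_public = is_allowed("https://example.com/products")
allowed_private = is_allowed("https://example.com/private/report")
delay_seconds = rules.crawl_delay("example-crawler")  # seconds between requests
```

Here the public page is allowed, the path under `/private/` is not, and the crawler is asked to wait two seconds between requests.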

How a Web Crawling API Simplifies Data Extraction

Web crawling APIs make extracting data much easier by offering pre-built tools and solutions. These tools take care of the technical challenges, providing organized, ready-to-use data for various applications.

Features of a Web Crawling API

WebCrawlerAPI, for example, simplifies the process with features like anti-bot bypassing and JavaScript rendering. It also includes automated proxy rotation and optimized requests to help maintain access to complex websites.

The API supports various output formats, catering to different requirements:

| Output Format | Benefits |
|---|---|
| JSON | Simple to parse and manipulate data |
| HTML | Maintains the original structure and styling |
| Text | Provides clean content without any markup |
| Markdown | Keeps structure intact with lightweight formatting |
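To illustrate the difference between the HTML and Text formats, the following sketch strips markup from a small HTML snippet using only the standard library. It is a rough approximation of what a "clean text" output might contain, not the API's actual conversion logic; the sample HTML is invented, and a production converter would also need to skip `<script>` and `<style>` content.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Keeps only the text nodes of a document, discarding all tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)


def html_to_text(html):
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()  # flush any text still buffered by the parser
    # Join the text nodes and collapse runs of whitespace into single spaces.
    return " ".join(" ".join(extractor.chunks).split())


html_output = "<h1>Pricing</h1><p>Plan: <b>Basic</b></p>"
text_output = html_to_text(html_output)
print(text_output)
```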

Scalability and Reliability of APIs

Scalability and reliability are key when dealing with large-scale data extraction. Web crawling APIs use distributed systems to handle high volumes of requests and ensure uptime with automated error handling and backup systems.

If a request fails, the API retries using alternative methods to retrieve the data. WebCrawlerAPI’s setup supports thousands of simultaneous requests while maintaining quality and speed. Plus, its pay-per-use model eliminates the need for businesses to worry about maintaining their own infrastructure.
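The retry behavior described here can be approximated on the client side as well. The sketch below shows one common pattern, retrying with exponential backoff; the function names, the delay values, and the `flaky_fetch` stand-in are all assumptions for illustration and say nothing about how WebCrawlerAPI retries internally.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fetch(url); on failure, wait and retry with doubling delays."""
    last_error = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as error:  # a real client would catch narrower errors
            last_error = error
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error


# Demo with a stand-in fetcher that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return {"url": url, "status": "ok"}


delays = []  # record the waits instead of actually sleeping
result = fetch_with_retries(flaky_fetch, "https://example.com", sleep=delays.append)
```

Passing `sleep` as a parameter keeps the demo instant and makes the backoff schedule easy to inspect.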

Using WebCrawlerAPI: A Practical Example

Let's dive into how WebCrawlerAPI works with a straightforward example.

Code Example: Data Extraction with WebCrawlerAPI

Below is a Python script that sends a crawl request to WebCrawlerAPI with two optional settings, a proxy and a wait time:

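```python
import json

import requests

api_key = "your_api_key"
url = "https://example.com"

headers = {
    "Authorization": f"Bearer {api_key}",
    "Content-Type": "application/json",
    "User-Agent": "Mozilla/5.0",
}

# Pass everything through `params` so requests URL-encodes the values.
# The nested proxy settings are serialized to a JSON string here; check the
# API documentation for the encoding it actually expects.
params = {
    "url": url,
    "proxy": json.dumps({"type": "residential", "country": "US"}),
    "wait_time": 2000,  # milliseconds
}

response = requests.get(
    "https://api.webcrawlerapi.com/v1/crawl",
    headers=headers,
    params=params,
    timeout=30,  # avoid hanging indefinitely on a stalled connection
)

if response.status_code == 200:
    print(response.json())
else:
    print(f"Failed to retrieve data (HTTP {response.status_code})")
```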

This script sends a GET request to WebCrawlerAPI, and the response is returned in JSON format, making it simple to process and integrate.

Customizing API Requests

WebCrawlerAPI offers several options to tailor requests for specific needs, like using proxies or modifying headers. Here's a breakdown of the main parameters:

| Parameter | Purpose | Example Value |
|---|---|---|
| proxy | Define proxy type | {"type": "residential"} |
| headers | Mimic browser headers | {"User-Agent": "Mozilla/5.0"} |
| cookies | Manage sessions | {"sessionId": "abc123"} |
| wait_time | Add delay between requests | 2000 (milliseconds) |

These parameters are especially useful when dealing with complex websites. The API takes care of proxy management and request optimization, freeing developers to focus on analyzing the extracted data.
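A small helper can keep these options in one place and leave out anything that was not set. The sketch below is hypothetical: `build_params` is not part of any SDK, and sending nested options as JSON strings in the query string is an assumption that should be checked against the API documentation.

```python
import json


def build_params(url, proxy=None, headers=None, cookies=None, wait_time=None):
    """Assemble query parameters, skipping options that were not set."""
    params = {"url": url}
    # Assumption: nested options are sent as JSON strings in the query string.
    for name, value in (("proxy", proxy), ("headers", headers), ("cookies", cookies)):
        if value is not None:
            params[name] = json.dumps(value)
    if wait_time is not None:
        if wait_time < 0:
            raise ValueError("wait_time must be non-negative (milliseconds)")
        params["wait_time"] = wait_time
    return params


params = build_params(
    "https://example.com",
    proxy={"type": "residential"},
    cookies={"sessionId": "abc123"},
    wait_time=2000,
)
```

The returned dictionary can be passed as the `params` argument of `requests.get`, which takes care of URL-encoding each value.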

Fine-tuning these settings helps keep data gathering precise and efficient.


Benefits of Using a Web Crawling API

Understanding how WebCrawlerAPI functions is one thing, but grasping the broader advantages of web crawling APIs can help developers, data scientists, and AI professionals make the most of these tools for reliable data extraction.

Efficiency and Scalability

Web crawling APIs make large-scale data extraction much faster and easier. Research by Oxylabs highlights that these tools can cut data extraction time by up to 90% compared to older scraping methods. They also allow users to manage multiple websites and process large amounts of data at the same time, all without overloading servers.
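On the client side, processing several sites at once usually means issuing requests in parallel. The following sketch uses a thread pool from the standard library; `crawl_many` and `fake_fetch` are illustrative names, and the stand-in fetcher replaces real API calls so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def crawl_many(urls, fetch, max_workers=4):
    """Fetch several URLs in parallel; return {url: result or exception}."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {url: pool.submit(fetch, url) for url in urls}
        for url, future in futures.items():
            try:
                results[url] = future.result()
            except Exception as error:
                # Keep the error so one failure does not discard the rest.
                results[url] = error
    return results


def fake_fetch(url):
    """Stand-in for a real API call; fails for URLs containing 'broken'."""
    if "broken" in url:
        raise ValueError("simulated failure")
    return f"content of {url}"


results = crawl_many(
    [
        "https://example.com/a",
        "https://example.com/b",
        "https://example.com/broken",
    ],
    fake_fetch,
)
```

Capping `max_workers` is a simple way to bound how many requests are in flight at any moment.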

Reliability and Accuracy

These APIs are built to return consistent, precise results. Features like JavaScript rendering, anti-bot handling, and error handling make extraction dependable, and request options let you adapt them to specific project requirements.

Flexibility and Customization

Web crawling APIs offer options to customize requests, such as selecting output formats, configuring proxies, or adding custom headers. This makes them suitable for a wide range of applications, including gathering e-commerce data or aggregating content for AI training models, all while staying compliant with the terms of service of target websites.

Conclusion: Why Use a Web Crawling API?

Web crawling APIs have simplified data extraction, making it faster and more accessible for businesses and developers. They’ve changed how large-scale data collection is handled, offering a practical solution for complex tasks.

The key advantage of a web crawling API is its ability to automate and simplify data extraction. This reduces the need for manual work, allowing teams to focus on analyzing data and building applications instead [1][3].

For example, APIs like WebCrawlerAPI help businesses collect data efficiently. E-commerce platforms can gather competitor pricing information, and content aggregators can pull data from multiple sources seamlessly [5]. What once took weeks can now be done in just hours.

These APIs also tackle technical hurdles like JavaScript rendering and anti-bot defenses, all while staying compliant with website policies. They handle infrastructure management, so you don’t have to.

Web crawling APIs are scalable, adapting to your growing data needs without adding infrastructure headaches [3][2]. With automated and customizable data collection options, they’re an essential tool for any data-driven project.

FAQs

What is the difference between API and web scraping?

Web crawling APIs and web scraping both extract data from websites, but they do so in different ways. Here's a side-by-side comparison:

| Feature | Web Crawling API | Web Scraping |
|---|---|---|
| Access Method | Uses a structured interface | Extracts data directly from websites |
| Reliability | High, thanks to managed infrastructure | Can vary, depending on site changes |
| Compliance | Automatically follows website rules | Requires manual compliance setup |
| Scalability | Automatically scales as needed | Limited by your own infrastructure |
| Maintenance | Handled by the API provider | Requires frequent updates to keep up with changes |

APIs tackle challenges like rate limits, JavaScript rendering, and anti-bot defenses without requiring additional effort on your part. They’re designed to simplify the process for users, offering a more seamless experience.

On the other hand, traditional web scraping provides more flexibility but demands a high level of technical expertise. It also requires constant updates to adapt to changes in website structures [1][3]. Think of it like this: using an API is like taking a ride-sharing service - it saves time and effort compared to maintaining and driving your own car.
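The maintenance burden is easy to see in a small hand-written scraper. The sketch below, which uses only the standard library and invented HTML snippets, extracts a price from a `<span class="price">` element and then silently finds nothing once the site's markup changes.

```python
from html.parser import HTMLParser


class PriceScraper(HTMLParser):
    """Extracts text inside <span class="price">; tied to that exact markup."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def scrape_prices(html):
    scraper = PriceScraper()
    scraper.feed(html)
    return scraper.prices


# Hypothetical markup before and after a site redesign.
old_layout = '<div><span class="price">$19.99</span></div>'
new_layout = '<div><p class="product-price">$19.99</p></div>'

prices_before = scrape_prices(old_layout)  # finds the price
prices_after = scrape_prices(new_layout)   # finds nothing after the redesign
```

Nothing raises an error in the second case, which is why scrapers of this kind need monitoring as well as regular updates.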

While web scraping tools can pull data from almost any publicly available site, APIs may have restrictions on what data they can access. However, APIs often provide a more stable and efficient way to get the information you need [4][5]. Understanding these differences can help you choose the right tool for your data extraction needs.