Table of Contents

- Quick Comparison
- Comparing Performance: Python vs Node.js
  - Speed and Efficiency Analysis
  - Scalability and Resource Use
- Library and Framework Overview
  - Python Tools: Scrapy and BeautifulSoup
  - Node.js Tools: Puppeteer and Cheerio
  - Library Comparison Table
- Use Cases for Python and Node.js
  - When to Use Python
  - When to Use Node.js
- Using APIs for Web Crawling
  - WebCrawlerAPI Features
  - Why Use APIs?
- Conclusion
  - Final Comparison: Python vs Node.js
  - Key Takeaways

Python and Node.js are both excellent tools for web crawling, but the right choice depends on your project needs.

- Choose Python for large-scale data extraction, static websites, and tasks involving heavy data processing. Scrapy handles large crawls, BeautifulSoup makes HTML parsing straightforward, and both feed directly into Python's data-processing tools.

- Opt for Node.js for real-time scraping, dynamic content, and JavaScript-heavy sites. Puppeteer drives a headless browser that renders modern web applications, while Cheerio offers fast, jQuery-style parsing of the HTML you retrieve.

Quick Comparison

| Feature | Python | Node.js |
|---|---|---|
| Performance | Slower execution; better for data-heavy tasks | Faster execution; great for real-time scraping |
| Best Libraries | Scrapy, BeautifulSoup | Puppeteer, Cheerio |
| Use Cases | Static websites, data analysis | JavaScript-heavy, dynamic websites |
| Learning Curve | Beginner-friendly | More complex for automation |
| Scalability | Easily scales across machines | Requires setup for scaling |

Key takeaway: Use Python for data-focused projects and Node.js for speed and dynamic content. APIs like WebCrawlerAPI can simplify tasks for both platforms.

Comparing Performance: Python vs Node.js

Performance differences between Python and Node.js play a key role in determining their suitability for web crawling tasks. Here's how they stack up.

Speed and Efficiency Analysis

Node.js runs on the V8 engine, which compiles JavaScript just in time and is faster in raw execution than Python's standard interpreter. This makes it well suited to quick data extraction and real-time tasks. Its event-driven, non-blocking architecture lets a single process keep many requests in flight at once, which fits lightweight, high-frequency crawls.
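The event-loop model is not unique to JavaScript: Python's standard-library `asyncio` works the same way, with one thread switching between tasks whenever one is waiting on I/O. The sketch below is a Python analogue of that idea, not Node.js code. `fake_fetch` is a hypothetical stand-in that simulates network latency with `asyncio.sleep`, so ten "requests" overlap and finish in roughly the time of one.

```python
import asyncio
import time


async def fake_fetch(url: str, latency_s: float = 0.2) -> str:
    """Hypothetical stand-in for an HTTP request: waits without blocking the loop."""
    await asyncio.sleep(latency_s)  # yields control so other requests can progress
    return f"<html><title>{url}</title></html>"


async def crawl_concurrently(urls: list[str]) -> list[str]:
    # gather() runs every coroutine on the same event loop and waits for all of them
    return await asyncio.gather(*(fake_fetch(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    start = time.perf_counter()
    pages = asyncio.run(crawl_concurrently(urls))
    elapsed = time.perf_counter() - start
    # Ten 0.2 s waits overlap, so the total stays near 0.2 s instead of 2 s.
    print(f"{len(pages)} pages in {elapsed:.2f} s")
```

Swapping `fake_fetch` for a real asynchronous HTTP client keeps the same structure; the speedup comes from overlapping waits, not from faster code execution.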

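Python executes code more slowly, but crawling is usually I/O-bound: most of the time goes to waiting for network responses, not to running interpreter code. As a result, Python's speed limitations rarely cause significant issues in practical web crawling, and its strength in complex data processing often matters more.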

Scalability and Resource Use

Both Python and Node.js bring unique strengths to large-scale web crawling, but their approaches differ:

| Aspect | Node.js Strength | Web Crawling Benefit |
|---|---|---|
| Memory Use | Non-blocking I/O | Manages many requests at once with less memory |
| CPU Efficiency | V8 engine (just-in-time compilation) | Runs parsing and processing code quickly between requests |

Python, on the other hand, emphasizes processing large datasets efficiently. It may use more memory and CPU per request, but its libraries are designed for complex tasks like data analysis and manipulation. This makes Python particularly useful for crawling projects that require heavy data processing.
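As a small illustration of the post-crawl processing Python handles well, the sketch below normalizes raw price strings from scraped records and averages them per category. It sticks to the standard library (`re`, `decimal`, `collections`) so it runs anywhere; the record fields `category` and `price` are hypothetical, and larger projects would often use a dedicated data-analysis library for this step.

```python
import re
from collections import defaultdict
from decimal import Decimal
from typing import Optional


def parse_price(raw: str) -> Optional[Decimal]:
    """Turn strings like '$1,299.00' or '15.50 USD' into a Decimal; None if no number."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))  # drop thousands separators


def average_price_by_category(records: list[dict]) -> dict:
    totals = defaultdict(Decimal)  # Decimal() starts at 0, avoiding float rounding
    counts = defaultdict(int)
    for record in records:
        price = parse_price(record.get("price", ""))
        if price is None:  # skip rows whose price could not be parsed
            continue
        totals[record["category"]] += price
        counts[record["category"]] += 1
    return {cat: totals[cat] / counts[cat] for cat in totals}


if __name__ == "__main__":
    scraped = [
        {"category": "laptops", "price": "$1,299.00"},
        {"category": "laptops", "price": "$999.00"},
        {"category": "books", "price": "15.50 USD"},
        {"category": "books", "price": "N/A"},
    ]
    print(average_price_by_category(scraped))
```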

For dynamic content, Node.js's Puppeteer library controls a real headless Chrome or Chromium browser. That approach is effective, but each rendered page costs much more CPU, memory, and time than a plain HTTP request, which adds up in large-scale operations.
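Because each browser page is expensive, crawlers that render JavaScript usually cap how many pages are open at once. The sketch below shows that pattern with Python's `asyncio.Semaphore`. `render_page` is a hypothetical placeholder that only simulates a slow render, standing in for a real headless-browser call (Puppeteer in Node.js, or a browser-automation library in Python), and the limit of 3 is an arbitrary example value.

```python
import asyncio

MAX_OPEN_PAGES = 3  # example cap; tune to the machine's CPU and memory


class RenderTracker:
    """Records how many simulated renders are running at the same moment."""

    def __init__(self) -> None:
        self.active = 0
        self.peak = 0


async def render_page(url: str, tracker: RenderTracker) -> str:
    # Hypothetical placeholder for opening a browser page and waiting for its JavaScript.
    tracker.active += 1
    tracker.peak = max(tracker.peak, tracker.active)
    await asyncio.sleep(0.05)
    tracker.active -= 1
    return f"rendered:{url}"


async def render_all(urls: list[str], limit: int = MAX_OPEN_PAGES) -> tuple[list[str], int]:
    semaphore = asyncio.Semaphore(limit)
    tracker = RenderTracker()

    async def guarded(url: str) -> str:
        async with semaphore:  # waits here while `limit` renders are already running
            return await render_page(url, tracker)

    results = await asyncio.gather(*(guarded(u) for u in urls))
    return list(results), tracker.peak
```

All URLs are still scheduled at once, but at most `limit` of them hold a "browser page" at any time, which keeps memory use predictable.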

The choice between Python and Node.js ultimately depends on your project's needs. Node.js is better suited to real-time data extraction with low per-request overhead (as long as a full browser is not required), while Python is the go-to for projects involving detailed data manipulation and analysis tasks [1][4].

It's also important to consider the libraries and frameworks available for each language, as they significantly impact web crawling efficiency.

Library and Framework Overview

Choosing the right libraries and frameworks plays a crucial role in web crawling. These tools leverage Python's strong data processing abilities and Node.js's event-driven design.

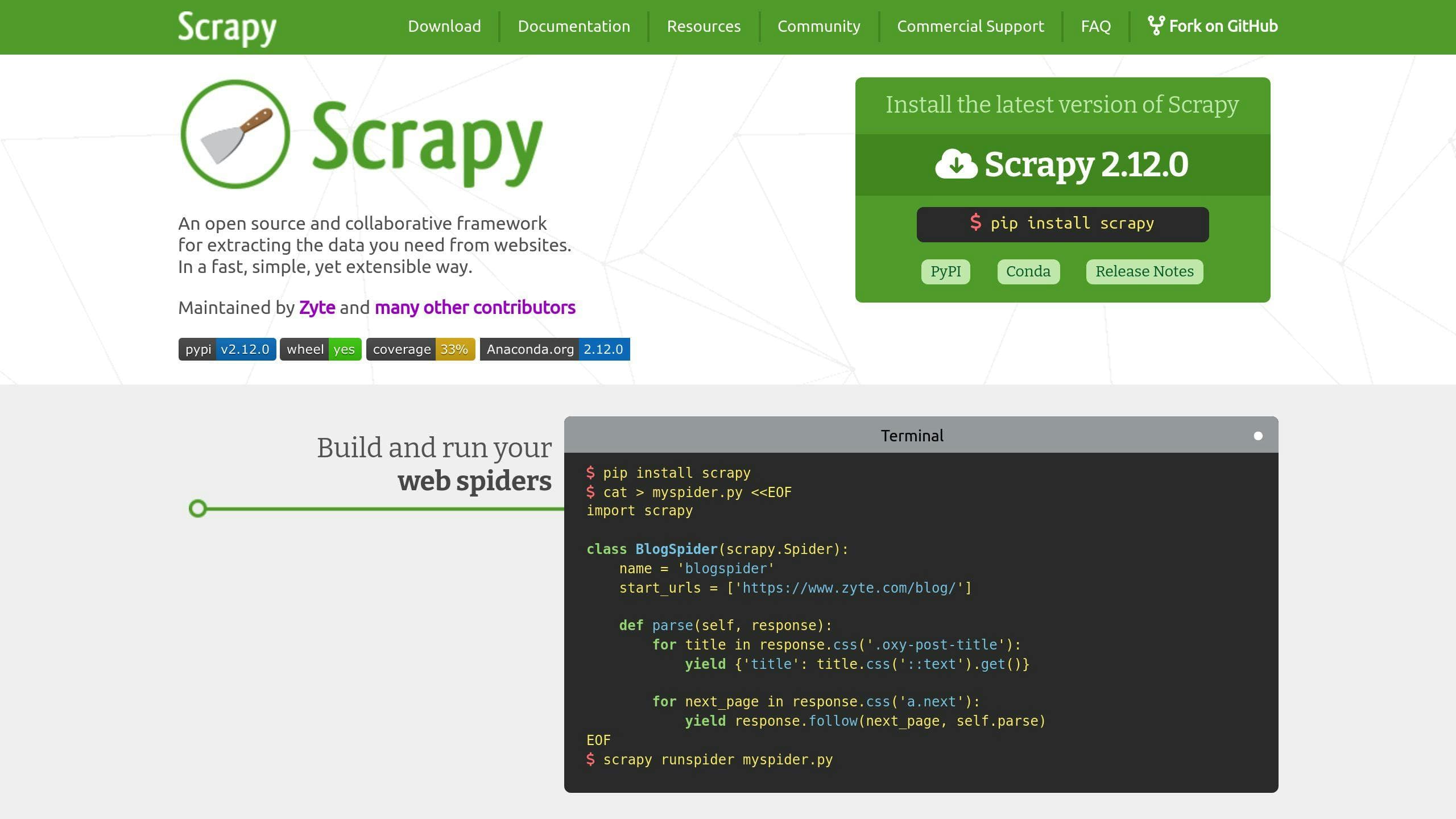

Python Tools: Scrapy and BeautifulSoup

Scrapy is a full crawling framework built for large-scale work: it schedules requests asynchronously and sends extracted items through pipelines that automate data processing and storage. BeautifulSoup, by contrast, focuses on parsing HTML and XML through an easy-to-use API, which makes it a good partner for Scrapy when you need precise data extraction.
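To show what the parsing step involves, here is a minimal sketch that pulls product names and prices out of a static HTML snippet. It deliberately uses the standard library's `html.parser.HTMLParser` instead of BeautifulSoup so it runs without extra packages; with BeautifulSoup the same extraction is typically a few `select()` or `find_all()` calls. The `product`, `name`, and `price` class names are hypothetical markup.

```python
from html.parser import HTMLParser
from typing import Optional


class ProductParser(HTMLParser):
    """Collects text from 'name' and 'price' elements inside each 'product' element."""

    def __init__(self) -> None:
        super().__init__()
        self.products: list[dict[str, str]] = []
        self._field: Optional[str] = None  # which field the current text belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # start a new record
        elif self.products and ("name" in classes or "price" in classes):
            self._field = "name" if "name" in classes else "price"

    def handle_endtag(self, tag):
        self._field = None  # text after a closing tag belongs to no field

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()


def extract_products(html: str) -> list[dict[str, str]]:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


if __name__ == "__main__":
    page = """
    <div class="product"><h2 class="name">Desk Lamp</h2><span class="price">$24.99</span></div>
    <div class="product"><h2 class="name">Bookshelf</h2><span class="price">$89.00</span></div>
    """
    print(extract_products(page))
    # [{'name': 'Desk Lamp', 'price': '$24.99'}, {'name': 'Bookshelf', 'price': '$89.00'}]
```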

Node.js Tools: Puppeteer and Cheerio

Puppeteer is a library that controls a headless Chrome or Chromium browser, which makes it ideal for JavaScript-heavy websites and for automating tasks like form submissions. Cheerio parses HTML with a jQuery-like syntax but does not execute JavaScript, so it suits simpler projects that need lightweight parsing of static markup.

Library Comparison Table

| Feature | Python Libraries | Node.js Libraries |
|---|---|---|
| Performance | Scrapy: Great for static content | Puppeteer: Ideal for dynamic content |
| Memory Usage | Scrapy: Optimized for large-scale use | Cheerio: Lightweight and efficient |
| Learning Curve | BeautifulSoup: Beginner-friendly | Puppeteer: More complex for automation |
| Best Use Case | Static or semi-dynamic sites | JavaScript-heavy, dynamic websites |
| Scalability | Easily scales across machines | Requires additional setup for scaling |

The right choice depends on your project's needs. For example, Scrapy often outperforms Puppeteer when crawling static e-commerce sites, because it fetches and parses raw HTML without the overhead of rendering each page in a browser. Puppeteer, in turn, shines with modern single-page applications that rely heavily on JavaScript [2][1].
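A quick way to decide between the two approaches is to check whether the data you need is already present in the raw HTML the server returns. The function below is a rough, hypothetical heuristic rather than a library feature, and its 50-word threshold is arbitrary: if the expected text is missing and the page contains scripts but almost no visible text, it is probably rendered client-side and needs a headless browser; otherwise a static tool like Scrapy or Cheerio is likely enough.

```python
import re


def probably_needs_browser(raw_html: str, expected_text: str) -> bool:
    """Rough heuristic: True if the raw HTML likely relies on client-side rendering."""
    if expected_text in raw_html:
        return False  # data is already in the served HTML, so static parsing works
    # Remove scripts, then all tags, to see how much visible text the server sent.
    without_scripts = re.sub(r"<script\b.*?</script>", "", raw_html, flags=re.S | re.I)
    visible_text = re.sub(r"<[^>]+>", " ", without_scripts)
    has_scripts = re.search(r"<script\b", raw_html, flags=re.I) is not None
    return has_scripts and len(visible_text.split()) < 50  # arbitrary threshold


if __name__ == "__main__":
    spa_shell = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'
    static_page = "<html><body><h1>Price: $10</h1></body></html>"
    print(probably_needs_browser(spa_shell, "Price"))
    print(probably_needs_browser(static_page, "Price: $10"))
```

A heuristic like this only flags candidates; confirming in a real browser's "view source" is still worthwhile before committing to an approach.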

Many developers combine these tools for better results. A popular strategy is using BeautifulSoup for parsing alongside Scrapy for crawling, or pairing Cheerio with Puppeteer to handle different tasks within the same project [1][4].
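That division of labor, one component deciding which pages to visit and another extracting data from each page, can be sketched in plain Python. This is a toy breadth-first crawler over a hypothetical in-memory `SITE` dictionary (so it runs offline), with link extraction done by the standard library's `HTMLParser`. In a real project, Scrapy would typically handle the scheduling and BeautifulSoup or Scrapy's own selectors the parsing.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical site: URL -> HTML. A real crawler would fetch these over HTTP.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "<p>No links here</p>",
}


class LinkParser(HTMLParser):
    """Parsing step: collect href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url: str, max_pages: int = 100) -> list[str]:
    """Crawling step: breadth-first traversal that visits each URL at most once."""
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = SITE.get(url)
        if html is None:  # unknown page (a 404 in a real crawler)
            continue
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links like "/b"
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


if __name__ == "__main__":
    print(crawl("https://example.com/"))
```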

Knowing the strengths of these libraries helps you select the best tool for your web crawling requirements.

Use Cases for Python and Node.js

Knowing when to pick Python or Node.js for web crawling can make or break your project. Each has its strengths, depending on the task at hand.

When to Use Python

Python is a go-to choice for projects involving heavy data extraction or analysis. Its rich library ecosystem is perfect for tasks that demand high throughput and advanced data processing.

Scrapy, for example, is highly efficient for large-scale crawling and can process thousands of pages per minute when its concurrency settings are tuned and the target servers respond quickly. Python works best for:

- Data-heavy tasks: Combining web crawling with in-depth data analysis.

- Static websites: Great for crawling e-commerce catalogs or content-focused platforms.

- Scientific projects: Ideal for scenarios requiring both data collection and analysis.

- Large-scale crawling: Distributed crawlers running across multiple machines.
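The "thousands of pages per minute" figure follows from simple arithmetic: when requests overlap, throughput is roughly concurrency divided by average response time, multiplied by the number of machines. The sketch below encodes that back-of-the-envelope estimate, plus one common way to split work across machines: hashing each URL's host so every domain lands on a single worker, which keeps per-site rate limits in one place. The numbers are illustrative assumptions, not benchmarks; Scrapy's real `CONCURRENT_REQUESTS` setting defaults to 16, but both helper functions here are hypothetical.

```python
import hashlib
from urllib.parse import urlsplit


def estimated_pages_per_minute(concurrency: int, avg_latency_s: float, machines: int = 1) -> float:
    """Back-of-the-envelope estimate: each request slot completes 1/latency pages per second."""
    return machines * concurrency * 60 / avg_latency_s


def shard_for(url: str, num_machines: int) -> int:
    """Assign a URL to a machine by hashing its host, so one site stays on one worker."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode()).digest()
    # A cryptographic digest is stable across processes, unlike the built-in hash().
    return int.from_bytes(digest[:8], "big") % num_machines


if __name__ == "__main__":
    # 16 simultaneous requests averaging 0.5 s each:
    print(estimated_pages_per_minute(16, 0.5))  # 1920.0
    print(estimated_pages_per_minute(16, 0.5, machines=4))  # 7680.0
```

Real throughput is usually lower once politeness delays, retries, and slow servers are factored in, so treat the estimate as an upper bound.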

When to Use Node.js

Node.js stands out when dealing with modern, dynamic web applications. Its asynchronous nature makes it a strong contender for real-time data collection, especially on JavaScript-heavy websites.

Powered by the V8 JavaScript engine, Node.js performs exceptionally well in scenarios like:

- Dynamic content: Crawling single-page applications or JavaScript-rendered sites.

- Real-time tracking: Monitoring live price updates or inventory changes on e-commerce sites.

- Interactive scraping: Handling websites that need user interaction or form submissions.

- API-heavy projects: Managing multiple API integrations efficiently.
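For the real-time tracking case, the core loop is simple: poll a page on an interval and report only when the value changes. The sketch below shows that loop with Python's `asyncio`, which uses the same event-loop style as Node.js. `fetch_price` is a hypothetical async callable you would implement with your HTTP or browser tool, and the interval and round count are example values.

```python
import asyncio
from typing import Awaitable, Callable, Optional


async def watch_price(
    fetch_price: Callable[[], Awaitable[float]],
    interval_s: float,
    rounds: int,
) -> list[tuple[int, float]]:
    """Poll `rounds` times and return (round, price) pairs whenever the price changes."""
    changes: list[tuple[int, float]] = []
    last: Optional[float] = None
    for i in range(rounds):
        price = await fetch_price()
        if price != last:  # first reading, or a genuine change
            changes.append((i, price))
            last = price
        await asyncio.sleep(interval_s)  # other tasks can run while this one waits
    return changes


if __name__ == "__main__":
    readings = iter([19.99, 19.99, 17.49, 17.49, 18.00])

    async def fake_fetch_price() -> float:
        return next(readings)  # simulated page readings

    print(asyncio.run(watch_price(fake_fetch_price, interval_s=0.01, rounds=5)))
    # [(0, 19.99), (2, 17.49), (4, 18.0)]
```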

Your choice should align with your project's goals. Node.js is faster in certain tasks thanks to its V8 engine [3], while Python's extensive libraries and ease of use make it better suited for large-scale data-focused projects [5].

For example, if you're building a crawler to track real-time price updates on dynamic e-commerce sites, Node.js with Puppeteer is a solid pick. On the other hand, if you're pulling large datasets from static news websites for analysis, Python with Scrapy is the way to go.

Using APIs for Web Crawling

Python and Node.js come with powerful libraries for web crawling, but APIs offer a simpler way to handle complex tasks, making the process more efficient on both platforms.

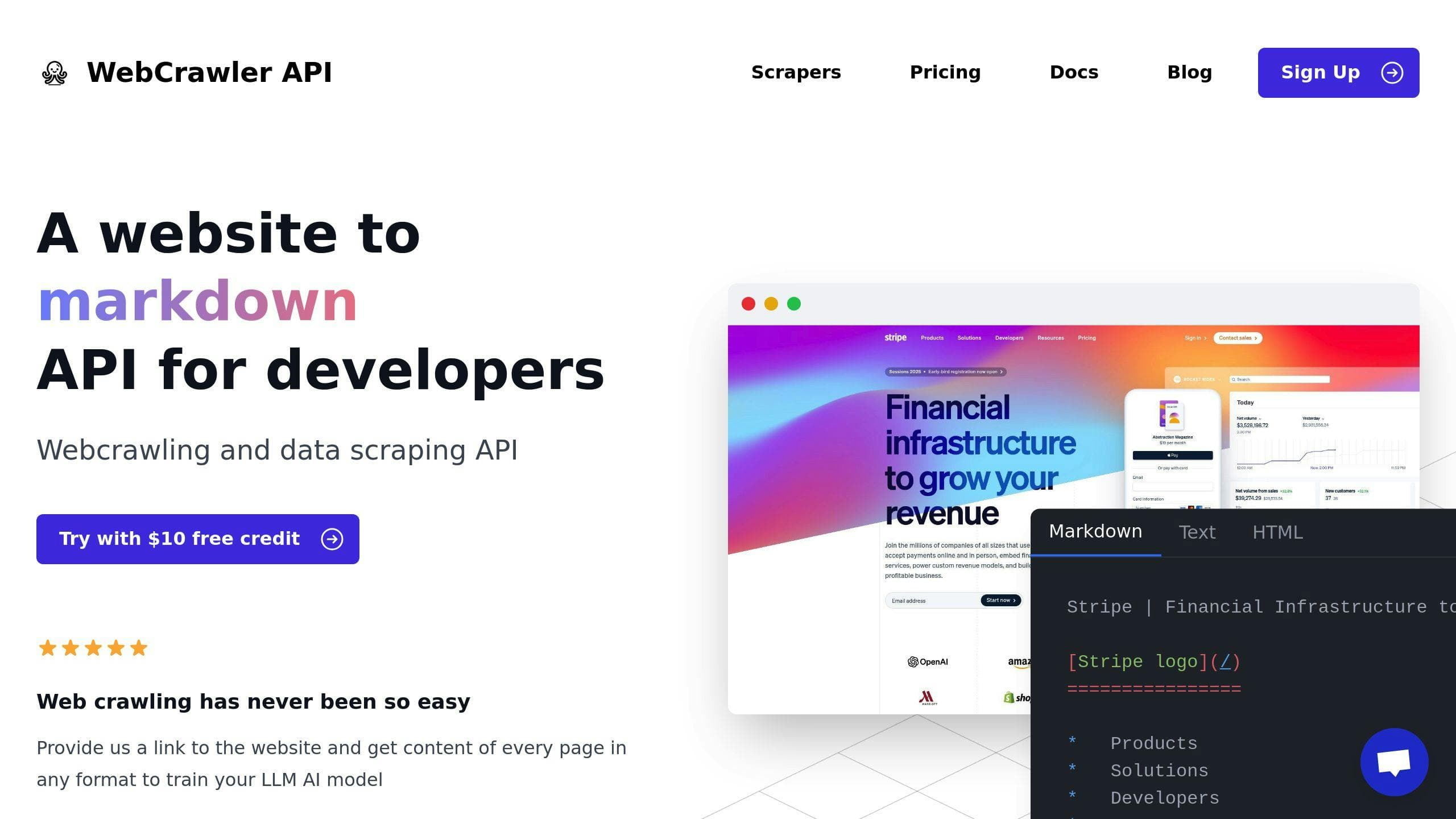

WebCrawlerAPI Features

WebCrawlerAPI works seamlessly with Python and Node.js, offering key features to optimize web crawling:

| Feature | Description |
|---|---|
| JavaScript Rendering | Automatically processes dynamic content loading |
| Anti-Bot Protection | Avoids CAPTCHAs and prevents IP blocking |
| Scalable Infrastructure | Handles large-scale crawling with ease |

You can integrate this API into Python projects using Scrapy or Node.js apps with Puppeteer without needing significant code changes [2].
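One way to keep that kind of integration to a small change is to make the rest of the crawler depend on a fetch function rather than on a specific tool. The sketch below is a hypothetical design, not WebCrawlerAPI's client: a `Fetcher` is any callable that returns HTML for a URL, so a local implementation and an API-backed one (whose request format would come from the provider's documentation) can be swapped without touching the parsing code.

```python
import re
from typing import Callable, Dict

# Any function that takes a URL and returns its HTML counts as a fetcher.
Fetcher = Callable[[str], str]


def extract_title(html: str) -> str:
    """Parsing logic that never needs to know where the HTML came from."""
    match = re.search(r"<title>(.*?)</title>", html, flags=re.S | re.I)
    return match.group(1).strip() if match else ""


def scrape_titles(urls: list[str], fetch: Fetcher) -> Dict[str, str]:
    return {url: extract_title(fetch(url)) for url in urls}


def make_offline_fetcher(pages: Dict[str, str]) -> Fetcher:
    """Stand-in fetcher for tests; a real one might call an HTTP library or a crawling API."""
    return lambda url: pages.get(url, "")
```

Only the fetcher passed to `scrape_titles` changes when moving between a local tool and a hosted API; the extraction code stays the same.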

Why Use APIs?

APIs simplify web crawling by tackling infrastructure challenges that would otherwise require additional tools in Python or Node.js.

Save Time and Resources: APIs take care of proxies, CAPTCHAs, and scaling, cutting down on development time and costs [1].

Simplified Data Handling: APIs come with features like:

- Automated JavaScript rendering and CAPTCHA solving

- IP rotation and proxy management

- Built-in tools for data parsing and cleaning
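To see what an API saves you from writing, here is a minimal sketch of just one piece: rotating through proxies with retries and exponential backoff. The proxy addresses you pass in are placeholders, `fetch_via` is a hypothetical callable that raises on failure, and the attempt count and delays are example values. CAPTCHA handling and JavaScript rendering would still be missing.

```python
import itertools
import time
from typing import Callable, Optional, Sequence


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch_via: Callable[[str, str], str],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try proxies round-robin, doubling the wait after each failed attempt."""
    if not proxies:
        raise ValueError("need at least one proxy")
    proxy_cycle = itertools.cycle(proxies)
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        proxy = next(proxy_cycle)
        try:
            return fetch_via(url, proxy)
        except Exception as exc:  # real code should catch narrower network errors
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s with the defaults
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error
```

The `sleep` parameter is injectable so tests can record the backoff delays instead of actually waiting.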

Works Across Platforms: APIs deliver consistent results in Python and Node.js, making them ideal for teams using both languages [2]. This ensures smooth workflows regardless of the programming environment.

Whether you’re leveraging Python for data analysis or Node.js for its event-driven model, APIs help you focus on extracting and analyzing data rather than dealing with infrastructure [1].

Conclusion

After comparing their performance, libraries, and use cases, here's how Python and Node.js stack up for web crawling.

Final Comparison: Python vs Node.js

Python and Node.js each bring different advantages to web crawling. Python is a strong choice for handling data-heavy projects, especially those involving large-scale data extraction and analysis. Libraries like Scrapy and BeautifulSoup are excellent for managing complex data extraction tasks.

On the other hand, Node.js shines when it comes to speed. Its V8 engine executes JavaScript quickly, and tools like Puppeteer make it well suited to dynamic content. Benchmark tests frequently show Node.js outpacing Python in raw execution speed, making it a great pick for performance-driven tasks [3].

Key Takeaways

The best choice depends on the specific needs of your project:

- Go with Python if you need: Tools for data-intensive tasks, large-scale data extraction, or seamless integration with data science workflows [1].

- Opt for Node.js if you need: Real-time scraping, handling dynamic content, or event-driven workflows [2].

APIs like WebCrawlerAPI can help tackle common web crawling challenges. They offer features like JavaScript rendering and anti-bot protection, and they work with both Python and Node.js [4].

Python's simple syntax makes it beginner-friendly, while Node.js offers more control for experienced developers. The right tool ultimately depends on your project's demands and goals.